Built for Builders, by Builders – The Local AI Revolution Starts Here

AI agents are no longer a novelty — they’re an essential part of how DevOps teams automate, monitor, and respond. At the center of this evolution is OpenAI’s ChatGPT. But as we scaled its usage at AiOpsOne®, a deeper need surfaced: autonomy.

Not just intelligent outputs, but self-hosted intelligence — private, tweakable, and built for real-world production environments.

This guide is for engineers, SREs, and AI-native builders who want the power of ChatGPT, but with full local control.

🧠 Why Local AI in 2025?

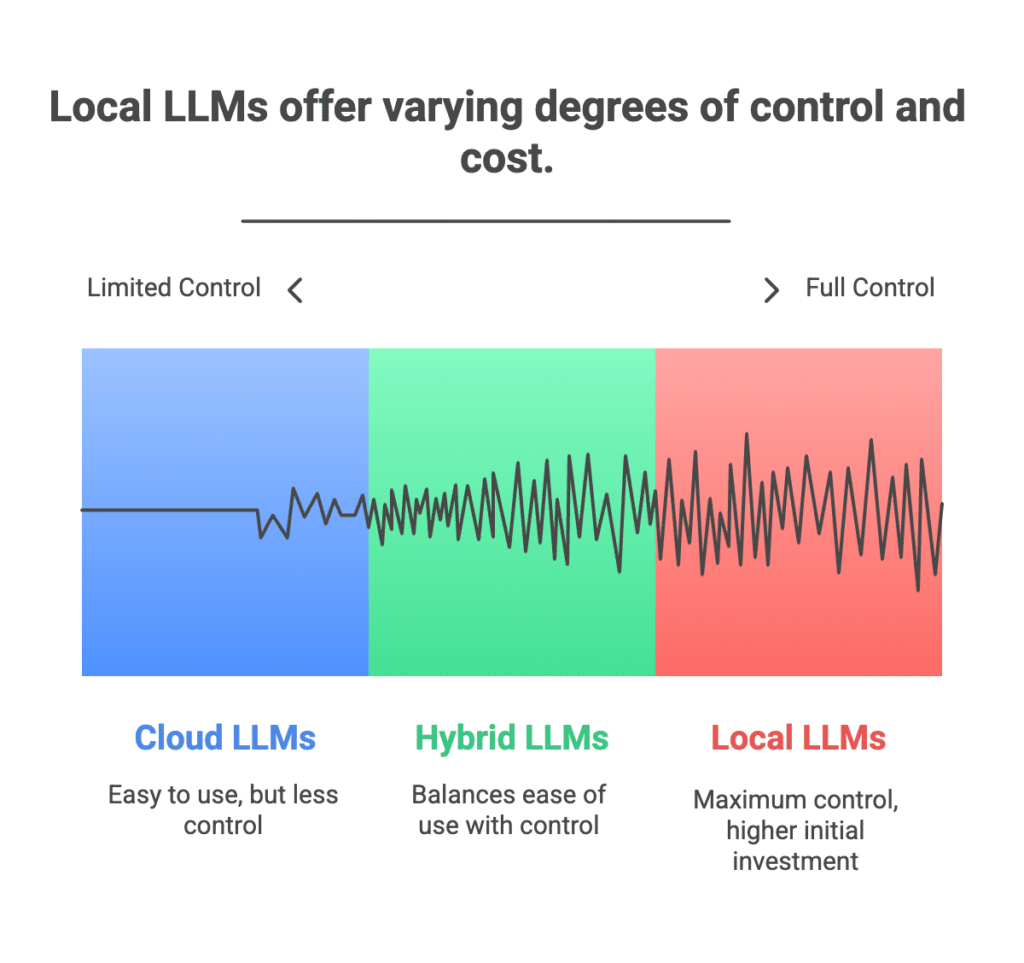

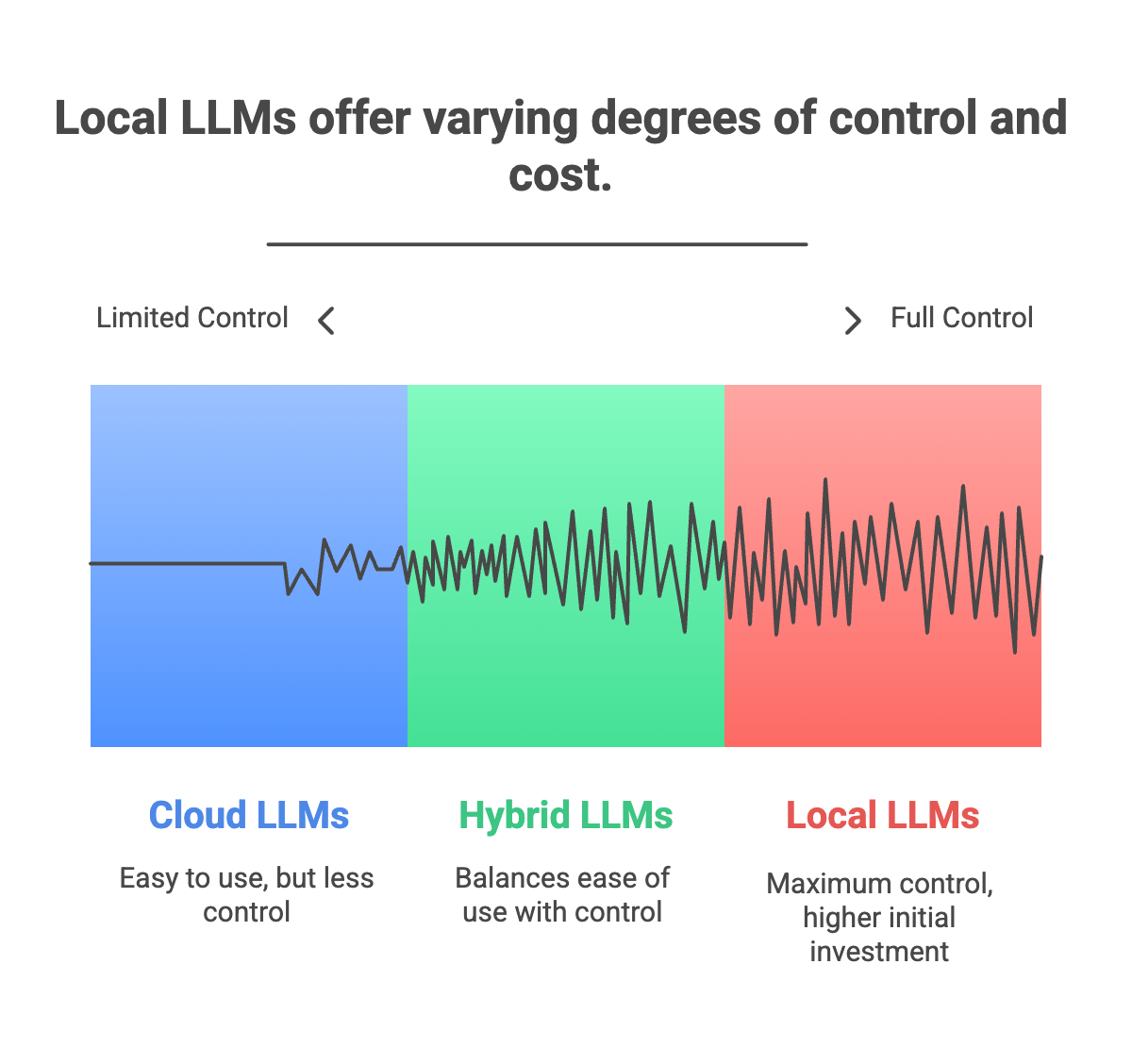

Sure, cloud models are convenient. But here’s why we at AiOpsOne® began moving our stack local-first:

- Zero Data Leakage: Full isolation. No external API calls. Data stays behind your firewall.

- Security by Default: Ideal for handling infrastructure logs, compliance workloads, or customer data.

- Always Available: Whether you’re deploying in a submarine or a datacenter, your AI works — offline.

- Hackable and Auditable: No black boxes. Just clean code you can inspect, fork, and fine-tune.

- Cloud Cost Savings: If you’re running prompts at scale, local LLMs can undercut per-token API pricing once the hardware cost is amortized.
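To put the cost point in concrete terms, here is a rough break-even sketch. Every input is something you supply yourself: the function names and parameters are ours for illustration, and the figures in the usage note are placeholders, not real prices or benchmarks.

```python
from typing import Optional


def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """What a hosted API would bill for this token volume in one month."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_months(
    hardware_cost: float,
    monthly_running_cost: float,
    tokens_per_month: float,
    price_per_million_tokens: float,
) -> Optional[float]:
    """Months until local hardware pays for itself, or None if it never does."""
    # Monthly saving = avoided API spend minus power, hosting, and upkeep.
    saving = monthly_api_cost(tokens_per_month, price_per_million_tokens) - monthly_running_cost
    if saving <= 0:
        return None  # at this volume the API stays cheaper
    return hardware_cost / saving
```

With placeholder inputs of 6000 for hardware, 250 per month to run it, 500 million tokens per month, and 2.0 per million tokens, `break_even_months` returns 8.0. Drop the volume to 10 million tokens and it returns `None`, which is the honest answer for low-traffic teams.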

🛠 The 10 Best Local AI Chatbots for Engineers & Builders

After extensive testing across infrastructure workflows, agent pipelines, and day-to-day coding tasks, here are our top picks:

1. Ollama + LLaMA / Mistral / Gemma

🔥 Think “Docker for LLMs”

AiOpsOne Use Case: Embedding LLaMA 3 as the core of an on-prem observability copilot.
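For a feel of what embedding a local model looks like, here is a minimal sketch that calls Ollama's local REST endpoint using only the Python standard library. It assumes Ollama is running on its default port (11434); the helper names are ours, and `llama3` stands in for whatever model you have pulled.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text from the JSON reply."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

Once the server is up and a model is available (for example after `ollama pull llama3`), something like `ask("llama3", "Summarize this alert: ...")` should return the model's answer as a string. Nothing in the call path leaves localhost.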

2. LM Studio

💡 GUI-first, no terminal required

AiOpsOne Use Case: Run internal “documentation agents” for offline SOP queries.

3. LocalAI

🌐 https://github.com/go-skynet/LocalAI

🧩 A drop-in replacement for OpenAI APIs

AiOpsOne Use Case: Powering internal microservices with local GPT-compatible endpoints.
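"Drop-in" here means the request shape stays the same and only the base URL changes. The sketch below builds an OpenAI-style chat completion request and points it at a local server. The base URL (`http://localhost:8080`), the model name, and the helper names are assumptions for illustration; use whatever your LocalAI instance actually serves.

```python
import json
import urllib.request


def chat_request(base_url: str, model: str, user_message: str,
                 api_key: str = "not-needed") -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for any compatible server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Sent for client compatibility; a local server may ignore it.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Pull the assistant text out of an OpenAI-style response body."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


def chat(base_url: str, model: str, user_message: str, timeout: float = 120.0) -> str:
    """Send the request and return the assistant's reply."""
    request = chat_request(base_url, model, user_message)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return extract_reply(resp.read())
```

A microservice written this way can move between a hosted endpoint and a local one by changing `base_url` in its config, which is the main reason we reach for OpenAI-compatible servers internally.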

4. Text Generation Web UI (Oobabooga)

🌐 https://github.com/oobabooga/text-generation-webui

⚙️ The playground for tinkerers

AiOpsOne Use Case: Testing multi-agent chains in a local, flexible sandbox.

5. PrivateGPT

🌐 https://github.com/imartinez/privateGPT

📁 Chat with your files — totally offline

AiOpsOne Use Case: Run internal documentation Q&A agents in air-gapped environments.
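The idea behind "chat with your files" is retrieval: find the passages most relevant to a question, then hand them to the model as context. The toy sketch below uses plain keyword overlap to show the shape of that loop. It is not PrivateGPT's implementation (real tools typically rank with embeddings), and every function name is ours.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def chunk(text: str, max_words: int = 40) -> List[str]:
    """Split a document into passages of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def score(question: str, passage: str) -> int:
    """Count how many question tokens also appear in the passage."""
    q, p = Counter(tokenize(question)), Counter(tokenize(passage))
    return sum(min(q[t], p[t]) for t in q)


def top_passages(question: str, passages: List[str], k: int = 2) -> List[str]:
    """Return the k passages with the highest overlap score."""
    return sorted(passages, key=lambda p: score(question, p), reverse=True)[:k]


def build_prompt(question: str, passages: List[str]) -> str:
    """Assemble a grounded prompt to send to a local model."""
    context = "\n---\n".join(passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

The string from `build_prompt` can go to any local model. Because both retrieval and generation run on your own hardware, the same loop works in an air-gapped environment.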

6. GPT4All

🧠 Beginner-friendly, multi-platform LLM tool

AiOpsOne Use Case: Set up a quick offline AI assistant for DevOps playbooks and incident triage.

7. Jan (TerminalGPT)

🌐 https://github.com/adamyodinsky/TerminalGPT

💻 A local-first, beautifully designed macOS AI assistant

AiOpsOne Use Case: Lightweight CLI assistant for local test environments and scripting help.

8. Hermes / KoboldAI Horde

🌐 https://github.com/KoboldAI/KoboldAI-Client

📝 Built for storytelling — adapted by devs

AiOpsOne Use Case: Building role-play simulation agents for security incident response.

9. Chatbot UI + Ollama Backend

🌐 https://github.com/mckaywrigley/chatbot-ui

🧱 Your private ChatGPT clone

AiOpsOne Use Case: Internal “agent portal” for running infrastructure prompts securely.

10. Gaia by AMD

🌐 (Coming Soon via AMD)

🔗 Native AI for Windows + Ryzen AI

AiOpsOne Use Case: A fully local desktop agent that runs on internal laptops, ready for on-site ops.

🧭 Where AiOps is Headed

Self-hosted AI isn’t just about independence — it’s about resilience. In a world of increasing cloud uncertainty, data regulation, and edge computing, running your own LLM is a strategic advantage.

At AiOpsOne®, we’ve integrated these tools into:

- CI/CD pipelines for intelligent test case generation

- Real-time monitoring agents

- Offline compliance documentation analyzers

- AI copilots for terminal and Kubernetes workflows

💬 "Local AI is not a fallback — it’s the foundation of intelligent infrastructure."

🧪 Try It. Fork It. Own It.

These aren’t just ChatGPT alternatives. They’re infrastructure-grade agents for teams who build, debug, and deploy at scale. Choose one, get it running, and unlock a new era of offline-first DevOps intelligence.

The future of AI isn’t just smart — it’s self-hosted. And it’s yours.